Causation is a real relationship between features that exists independently of our awareness of them. Features C cause features E if, when all the features that make up C are present, E is present, under all conditions where the background features (B) required for the causal links are present. In other words, if we make a change to the world in such a way that C becomes present (while maintaining B), then as a consequence E will always be present. Causation is an implication between two non-overlapping sets of features that holds regardless of the state of irrelevant background features.

In this post we will consider different types of causes. We will then look at types of bias that prevent us from determining the true causal relationship. Finally we'll look at how to determine the true causal relationship in spite of confounders.

Part 1. Types of Causes

Cause and effect

With respect to a causal relationship, all features can be divided into four categories:

- C: the cause

- E: the effect

- B: background features required for the causal links

- I: irrelevant background features.

Here, whenever the cause is present, the effect is present, regardless of any background variables.

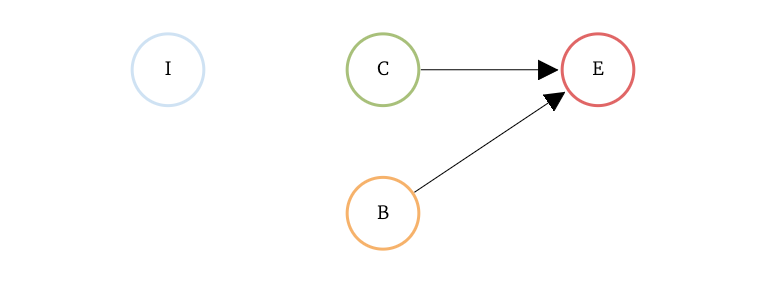

Cause with relevant background

Often for the implication relationship between cause and effect to hold, background features have to be in particular states.

Here, there is one relevant background feature B. The causal relationship between C and E only exist when B is in a particular state. In this case, there is only a single relevant background variable, and the link between C and E exists when B is present.

If C and B both have to be present, why do we call one the cause and the other background? When we think of 'the cause' of an effect, we usually think of the feature that changed its value. The unchanging background is required for the causal relation, but when it is static we seldom think of it as a 'cause'.

There are several ways we can think about the causal relationship. We could think of the value of E being a deterministic function of C and B.

However, because the value of \(E\) causally depends on \(C\) and \(B\), it might be more useful to think of the relationship as the assignment operation

This representation also seems insufficient. If we have an effect that can be brought about by multiple causes (see 'Overdetermined Effect' below), then if we were to take the assignment operator view, we would need to have all possible causes of \(E\) to be in the function. If we were to discover a new cause of \(E\), we would have to rewrite the function. For this reason, it might be better, in the case where the effect \(E\) is a binary feature, to think of the causal relationship being the implication relationship.

We can understand this to mean that if the output of the function \(f\) is true, then \(E\) will be present. If \(f\) is not true, we don't know the state of \(E\). Something else might have caused it to be true. In other words, those values of \(C\) and \(B\) that \(f\) maps to the state of \(E\) being present are sufficient for \(E\), but perhaps not necessary.

Causal Chain

When we say that C causes E, often there are intermediate features that totally mediate the causal relation, such that if those intermediate features were somehow blocked, the causal link would be broken. We can expand the relationship from C to E in a causal chain containing multiple causes.

- C1: Finger pressed down on piano key

- C2: Key lowers

- C3: Hammer strikes string

- C4: String vibrates

- C5: Air molecules vibrate

- C6: Pressure waves radiate out from string

- C7: A particular set of hair cells in my ear vibrate

- E: I hear the sound

In this example, all the features are binary valued. That is, they either were present or absent, they either happened or they didn't happen. And the causal relationships are implications. For every link in the chain, if the antecedents C and B are present, then the consequent will be present.

We can say that C1 caused E because it was the first cause in a causal chain connected to E. We can repeatedly press down the key and subsequently observe E. C1 implies E. We can change many irrelevant background features I and C1 would still imply E. For instance, I can raise my foot, and the causal implication would still hold. My foot being raised is a feature that does not change the causal relationship. However, there are background features B that would break the links in the causal chain if they were set to particular values. For instance, one of the features in B4 is air being present around the strings. If I sucked the air out, then B4 wouldn't be present, and it would break the link between C4 and C5.

Overdetermined Effect

We would like to say that "C caused E" means that if we had prevented C, E would not have occurred. This counterfactual theory of causation resonates with our intuition. However, this means that C was necessary for E, or in other words, E would not have been possible without C. If multiple causes can bring about E, then preventing any individual cause would not stop E from happening, so no particular cause \(C_1\) is necessary for E.

We can resolve this by saying that the causal relationship is an implication that holds in general, so that a particular cause C is sufficient to bring about E (C implies E). In a particular case when multiple sufficient causes are present, the counterfactual condition ($\lnot C \implies \lnot E $) doesn't hold, but we could bundle all the causes into a single causal variable, and then the counterfactual condition \(\lnot (C_1 \lor C_2) \implies \lnot E\) would hold.

- C1: A lit match is thrown into a pile of dry hay in a barn

- C2: Lightning strikes the pile of dry hay at exactly the same time

- E: The barn burns down

C1 and C2 together caused E, and each was sufficient for E on its own. But determining which one was the ‘real’ cause doesn’t make sense. Intuitively, both caused E. We can bundle them together ($C3 := C1 \lor C2$) and say if C3 hadn't happened, E wouldn't have happened.

Feedforward Inhibition

If a causal relationship exists between C and E when B is in a particular state, but C causes B to move away from that state, it can have the effect of preventing E from happening. This case can be counterintuitive, because C can both be though of as something that potentially causes E and something that prevents E from happening simultaneously.

- C: Enemy places a bomb outside Suszy's room

- B: Billy disables the bomb

- E: Suzy lives

If the background variable B had been such that the bomb was active, then Enemy would have caused Suzy's death (C -> E). Billy saved Suzy's life by disabling the bomb (B -> E). Also, Enemy placing the bomb caused Billy to disable it (C -> B). Because causation is transitive, it seems as though Enemy placing the bomb caused Suzy to live. This seems absurd. What is going wrong here?

If we think about the bomb being active as the background variable B that is required for C to cause E, but C causes B to be in a state where C no longer causes E, then the direct link from C to E is no longer present. So the Enemy placing the bomb no longer would have caused Suzy to die. The key is to realize we are doing two counterfactual analyses. In the first, the bomb is active, and Billy isn't around. Then C implies E. If C hadn't happened, E wouldn't have happened. In the second analysis, we are counterfactually varying B, while C is present. If Billy didn't disable the bomb, Suzy would have died, so Billy disabling the bomb causes Suzy to live.

Usually when we talk about the cause of something, we mentally hold all the background features other than the one under analysis constant, and we mentally modify the value of the feature under analysis and infer what the value of E would have been. We have to be careful about which features we are holding constant and which ones we aren't when we call something a 'cause'.

- C: A boulder slides down a mountain towards a hiker

- B: The hiker ducks

- E: The hiker lives

This example can be analyzed the same way as the bomb example. The hiker would have died if she hadn't ducked, but that background feature (her ducking) was changed by C. If we account for B, then C isn't a cause of E.

Stochastic Relationship

If the deterministic relationship between C and E depends on the state of a hidden background feature B, then if we observe C we won't be able to deduce the value of E with certainty. Rather, the value of E now becomes a stochastic function of C. Its value has a probability distribution conditioned on C: \(p(E|C)\).

- C: The door of your garage is open

- B: It is raining

- E: Your widget stops working

Suppose that your widget stops working whenever it is raining outside and you leave the garage door open. However, you are oblivious to the fact that there is any relationship between the rain and your widget breaking. But you do notice that your widget only stops working when the garage door is open. Suppose where you live it rains 25% of the time. Then you would say, with 0.25 probability that when your garage door is open your widget breaks.

The relationship between B, C, and E is deterministic. But because you are lacking knowledge, that uncertainty creates a stochastic relationship. Formally, you are integrating over the values of B to get the marginal relationship between C and E.

Part 2. Types of Bias

Post Treatment Bias

Conditioning on a mediating cause (C2) between a cause (C1) and and effect (E) can make it seem like there is no causal relationship between C1 and C2 when in fact there is one.

- C1: Celebrating a person's birthday

- C2: That person's happiness

- E: Their productivity at work

Suppose that if you celebrate someone's birthday at work, they become more happy, and this causes them to be more productive at work. You don't know this however, so you'd like to analyze the relationship between C1 and E.

You know that happy people are more productive, and you don't want happiness to counfound your analysis (darn happiness!), so you control for happiness. To do this, you measure happiness after people's birthdays and if they aren't happy you throw them out of the analysis. Now, after this conditioning on happiness, all the happy people are equally productive, so you find that productivity is independent of birthday celebrations. But we know it isn't, so what went wrong?

You've conditioned on a mediating variable. This means the only causal relationship you would ever see between C1 and E is if there was some causal relationship that didn't go through C2. By conditioning on a causal mediator, you block the effect between the cause and the effect.

Collider Bias

Also called 'explaining away' and 'selection bias'

When two causes of an effect have no direct causal relationship between them, a correlation can be created by conditioning on the effect. In this case, the effect is called a collider because the two causes collide on the effect. Conditioning on a collider induces a correlation that often can trick you into thinking there is a direct causal relationship between the variables.

- C1: Light Switch Up

- C2: Power On

- E: Light bulb on

The light bulb will only turn on if the light switch is up and the power is on. If either of these two things is false, E will be false.

C1 and C2 are causally independent. However, if you are able to observe E, then C1 and C2 become correlated. There are two ways to condition on E: 1) observing the light on and 2) observing the light off. If you observe the light on, you know C1 and C2 are both true. If you observe the light off, you know that at least one of the two causes if false. If you observed the lightbulb over a hundred days and conditioned on it being off (threw out the points where it was on), there would be a negative correlation between C1 and C2. When the light switch was down, the power was more likely to be on. When the power was off, the light switch was more likely to be up.

- C1: Athleticism

- C2: GPA

- E: Responded to Survey

A university sent out a survey to all of its alumni. Alumni who participated heavily in university sports were more likely to respond. Separately, alumni with high GPAs were more likely to respond. There was no correlation between athleticism and GPA among the alumni, but when we looked among the survey responses, there was a strong negative correlation. This is because we've conditioned on a collider, whether an alumnus responded. This phenomenon - correlation caused by conditioning - is extremely common.

Example modified from Probabilistic Graphical Models, Koller and Friedman (2009) ex. 21.3

Common Cause

If a cause (C) has multiple effects (E1 and E2), those effects will be correlated. This can suggest a causal relationship between E1 and E2 when there isn't one.

Part 3. Deconfounding

Controlled Experiment

If you have two entities, or units, and they are exactly the same in every regard, except that C is different between them, then any observed differences in the value of E must be due to the causal relationship between C and E. By holding the values of all the other features constant, you have isolated the relationship between C and E, given that particular state of background variables.

That's the idea behind the controlled experiment. You have two entities, or two groups of entities. One you call the control group, and one you call the experiment group. All values of B are the same between groups, and C is set to be different between groups. Since you controlled/conditioned on all B features that could play a role in the causal relationship, then you know that the change in C caused the change in E.

If you are the one doing the manipulation of C, then if you assume your manipulation of C was not causally influenced by any feature that was different between the groups, or any feature causally upstream (formally: D-separated), then you can know the arrow of causation goes from C to E. However, if you only observe that C and E are the only features that differ in value between the two groups, you can't know the direction of causation. If C and E are binary valued, causation is the implication relationship, so we can only determine the direction of causation if we ever observe one feature without the other. For instance, if we observe E without C on occasion, then we can infer that C causes E, and that something else causes E sometimes. We can rule out that E implies C because we observed E without C.

Once you know the causal relationship between C and E when all background variables are set to a particular state, does that knowledge generalize if we change a background variable? If we limit our context to only the controlled experiment itself, then we cannot generalize at all. In fact if we do our experiment in the basement, we don't even know if the same causal relationship would hold if we reran the experiment upstairs. This catastrophic failure to generalize is a huge limitation of a pure controlled experiment approach. We don't even know which variables to include in B and which to include in I, which is why we controlled for everything. In practice, when scientists run controlled experiments, they do so with a large corpus of knowledge on causal relationship, and have a good sense for which variables are important, which ones aren't, which enables them to make claims about when the results of an experiment would generalize and when they wouldn't. Unfortunately, the process by which they generalize is poorly understood and not yet formalized.

Back-door adjustment

Also called 'standardization'

Conditioning on the common cause will reveal the true causal relationship between C and E.

To get the average causal effect, control for B, measure C->E, then average C->E over all values of B as they appear in the population.

Stratification is a kind of conditioning, where you condition on sets of values background features and look at the causal effect for each set.

Front-door adjustment

To determine the causal impact of C1 on E, find the causal impact from C1 to C2, and the impact from C2 to E, and combine those impacts.

Randomized Experiment

If you randomly assign units to the control and treatment groups, then the background variables (B) should be evenly distributed between the groups. This means when you measure the difference between E in the two groups, you are measuring something close to the average causal effect if you randomly selected a unit.