This post presents a simple framework for explaining why a controlled experiment generates a good estimate of the causal effect a treatment has on a response variable, and under which conditions an observational study can provide the same information.

Probability arises from uncertainty

Let's consider a simple system with three variables, \(X\), \(C\), and \(Y\). The Bayesian network that shows how each of the variables is set has three edges: \(X \rightarrow C\), \(X \rightarrow Y\), and \(C \rightarrow Y\).

\(C\) is the treatment variable, \(Y\) is the response variable, and \(X\) is a covariate that affects both \(C\) and \(Y\). The relationship we are trying to understand is how \(C\) influences \(Y\).

Importantly, let's suppose that \(Y\) is a deterministic function of \(X\) and \(C\), \(Y = f(C, X)\). Many interesting systems are deterministic. Often we don't know the values of the variables that determine the response, or even which variables those are. But in this simplified example, suppose we know that \(Y\) is completely determined by \(C\) and \(X\).

For the rest of this post, we will think of the deterministic function as a conditional probability distribution \(P(Y | C, X)\) in which, for each state \((C, X)\), all of the probability mass sits on a single value of \(Y\). This lets us use the probability calculus.

If we knew this function, the problem of determining the causal effect of \(C\) on \(Y\) would be solved. We don't know it, but our goal isn't to discover the function. Our goal is to determine the causal effect of \(C\) on \(Y\) for a population of units with some distribution over \(X\). In other words, for a unit whose \(X\) is sampled from the population, what is the distribution over \(Y\) if we apply treatment \(C = c_0\), and what is it if we apply treatment \(C = c_1\)? Because \(X\) follows the same population distribution under both treatments, these two distributions are exactly the population distributions of \(Y\) we would see if every unit received \(c_0\), or if every unit received \(c_1\).

Although we don't know what the deterministic function \(f(C, X)\) is, we can use it to state our desired distributions. For each level of \(X\), the function outputs two values of \(Y\), one for each treatment. These are the two potential outcomes. To get the distribution of \(Y\) under each treatment across all levels of \(X\), we average over \(X\), weighting each level by its population probability \(P(X)\).

Once we perform this marginalization over \(X\), we are left with two distributions: one gives the distribution of \(Y\) if we apply treatment \(c_0\) to an arbitrary unit, and the other gives the distribution of \(Y\) if we apply treatment \(c_1\). In the potential outcomes literature, these are the distributions of \(Y^{C=c_0}\) and \(Y^{C=c_1}\) respectively, but to emphasize that our target distributions depend on the distribution of \(X\) in our population, we will keep the more verbose notation \(\sum_X P(X) P(Y|C,X)\).
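As a concrete illustration, here is a minimal Python sketch that computes the target distribution \(\sum_X P(X) P(Y|C,X)\) exactly for a toy system. Everything in it is an assumption made for illustration: a binary covariate \(X\) with a hypothetical \(P(X=1) = 0.3\), treatment levels coded 0 and 1 (standing in for \(c_0\) and \(c_1\)), and a hypothetical response function \(f(c, x) = c + 2x\) written as a point-mass conditional distribution.

```python
# Hypothetical toy system, chosen only for illustration.
P_X = {0: 0.7, 1: 0.3}   # population distribution over the covariate X
Y_VALUES = range(4)      # every value the hypothetical f can output

def f(c, x):
    """Hypothetical deterministic response function Y = f(C, X)."""
    return c + 2 * x

def p_y_given_c_x(y, c, x):
    """f written as a point-mass conditional distribution P(Y=y | C=c, X=x)."""
    return 1.0 if f(c, x) == y else 0.0

def target_distribution(c):
    """sum_X P(X) P(Y | C=c, X): the distribution of Y when treatment c
    is applied to a unit drawn from the population."""
    dist = {}
    for y in Y_VALUES:
        p = sum(P_X[x] * p_y_given_c_x(y, c, x) for x in P_X)
        if p > 0:
            dist[y] = p
    return dist

print(target_distribution(0))  # {0: 0.7, 2: 0.3}
print(target_distribution(1))  # {1: 0.7, 3: 0.3}
```

For each level \(x\), the two potential outcomes are \(f(0, x)\) and \(f(1, x)\); the sketch simply weights them by \(P(X = x)\).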

Observational studies

In an observational study, we can calculate the distribution of \(Y\) conditioned on \(C\), but this doesn't give us $ \sum_X P(X) P(Y|C,X) $ in general. To see this, let's use probability calculus to expand \(P(Y|C)\)

This is almost exactly the same as our target distribution, but in general

because

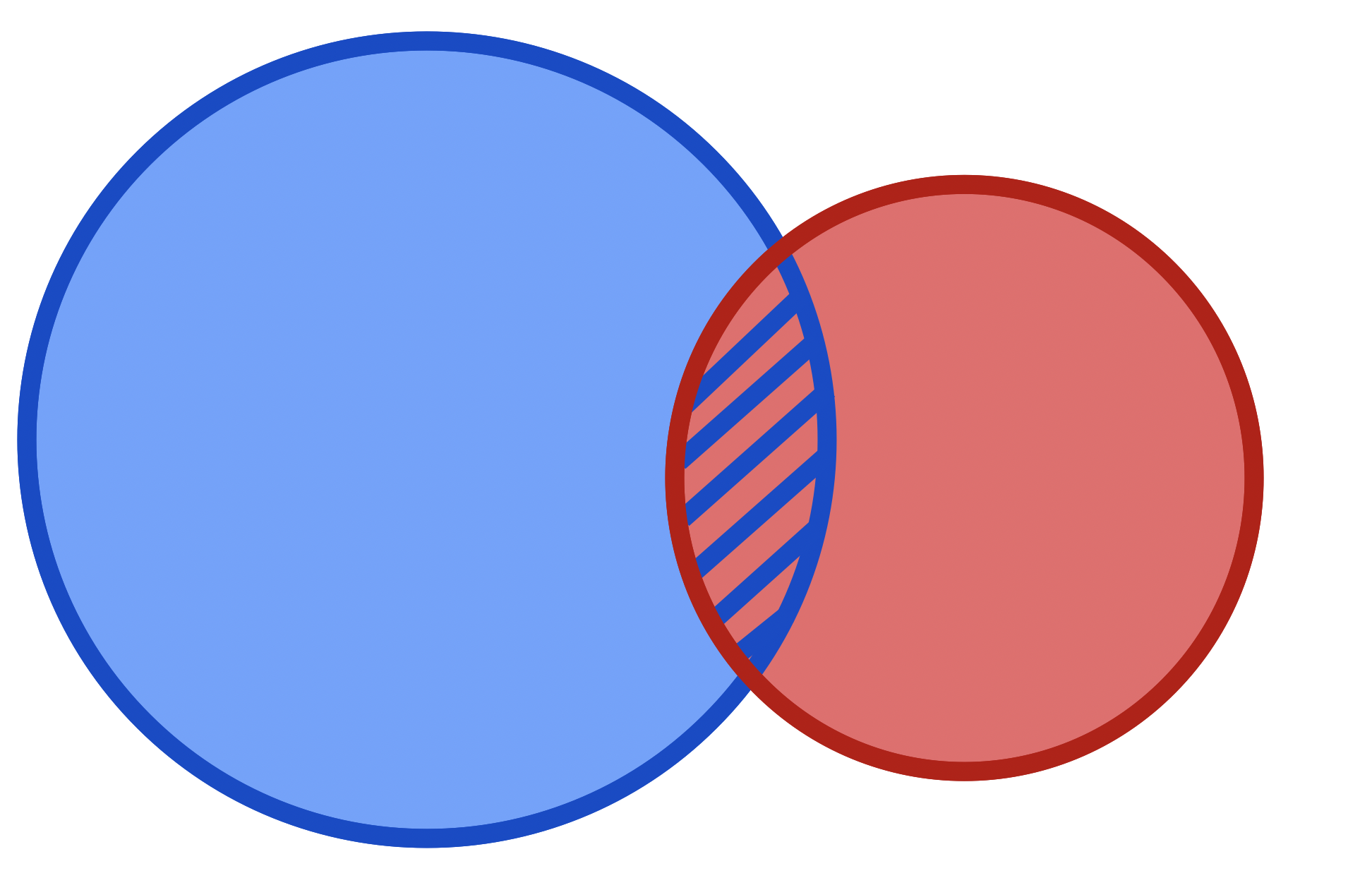

This means we can't use the observed conditional distribution directly. The problem is that \(X\) is not independent of \(C\): in the observational study \(X\) is a cause of \(C\), which opens the backdoor path \(C \leftarrow X \rightarrow Y\).

This is worth discussing in more detail because it is so important. \(X\) is a variable that directly sets the value of \(Y\). If the distribution of \(X\) is not the same in all the treatment groups, then it is impossible to determine if the observed differences in \(Y\) are due to our treatment \(C\), or if they are due to \(X\).

On the other hand, if the distributions of \(X\) are the same in the different treatment groups, then we can conclude that the treatment \(C\) must have been the cause of the observed differences in \(Y\), because everything else was the same.

If \(X \perp C\), we say that the treatment groups are exchangeable.
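The following sketch makes the failure of exchangeability concrete for a hypothetical toy system: binary \(X\) with \(P(X=1) = 0.3\), response \(f(c, x) = c + 2x\), and an assumed confounded assignment mechanism in which units with \(X = 1\) are treated with probability 0.9 and units with \(X = 0\) with probability 0.2. It computes \(P(X|C)\) by Bayes' rule and compares the observed difference in mean \(Y\) between groups with the difference between the target distributions. All numbers are illustrative assumptions, not results from real data.

```python
# Hypothetical toy system with confounded treatment assignment (illustration only).
P_X = {0: 0.7, 1: 0.3}           # population distribution over X
P_C1_GIVEN_X = {0: 0.2, 1: 0.9}  # assumed P(C=1 | X=x): X influences C

def f(c, x):
    """Hypothetical deterministic response Y = f(C, X)."""
    return c + 2 * x

def p_c_given_x(c, x):
    p1 = P_C1_GIVEN_X[x]
    return p1 if c == 1 else 1.0 - p1

def p_x_given_c(x, c):
    """Bayes' rule: P(X=x | C=c) = P(C=c | X=x) P(X=x) / P(C=c)."""
    p_c = sum(p_c_given_x(c, xx) * P_X[xx] for xx in P_X)
    return p_c_given_x(c, x) * P_X[x] / p_c

def observed_mean(c):
    """E[Y | C=c] = sum_x P(X=x | C=c) f(c, x): what the observational data show."""
    return sum(p_x_given_c(x, c) * f(c, x) for x in P_X)

def target_mean(c):
    """Mean of sum_x P(X=x) P(Y | C=c, X=x): what we actually want."""
    return sum(P_X[x] * f(c, x) for x in P_X)

print(f"P(X=1 | C=1) = {p_x_given_c(1, 1):.3f}, P(X=1) = {P_X[1]}")  # 0.659 vs 0.3
print(f"observed difference: {observed_mean(1) - observed_mean(0):.3f}")  # 2.215
print(f"causal difference:   {target_mean(1) - target_mean(0):.3f}")      # 1.000
```

Because treated units are disproportionately those with \(X = 1\), the naive comparison attributes part of the effect of \(X\) to \(C\).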

When \(X\) is observed, there is a simple fix that still lets us estimate \(\sum_X P(X) P(Y|C,X)\). If we condition on every level of \(X\), we can estimate the deterministic function \(P(Y | C, X)\) directly, provided every level of \(X\) appears under every treatment. We can then average this function over the distribution of \(X\) in the population of interest. In practice this is difficult because we often don't observe \(X\) (it is a latent variable). Even worse, we usually don't know which of the possible covariates enter the deterministic function \(Y = f(\cdot)\).
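Here is a minimal simulation sketch of this fix, which is commonly called backdoor adjustment. It assumes a hypothetical toy system in which \(X\) is binary with \(P(X=1) = 0.3\), is fully observed, and is the only covariate entering \(f(c, x) = c + 2x\); treatment is assigned with assumed probabilities 0.2 (for \(X = 0\)) and 0.9 (for \(X = 1\)). The sample size and seed are arbitrary.

```python
import random
from collections import defaultdict

# Hypothetical toy system (illustration only): X is observed and is the only
# covariate that enters f.
P_X1 = 0.3                       # assumed P(X=1) in the population
P_C1_GIVEN_X = {0: 0.2, 1: 0.9}  # assumed confounded assignment P(C=1 | X=x)

def f(c, x):
    """Hypothetical deterministic response Y = f(C, X)."""
    return c + 2 * x

def simulate_observational(n, rng):
    """Draw n units: X from the population, C depending on X, Y = f(C, X)."""
    rows = []
    for _ in range(n):
        x = 1 if rng.random() < P_X1 else 0
        c = 1 if rng.random() < P_C1_GIVEN_X[x] else 0
        rows.append((x, c, f(c, x)))
    return rows

def adjusted_mean(rows, c):
    """Estimate the mean of sum_x P(X=x) P(Y | C=c, X=x): the mean of Y within
    each (c, x) cell, weighted by the empirical P(X=x)."""
    n = len(rows)
    count_x = defaultdict(int)
    count_cx = defaultdict(int)
    sum_y_cx = defaultdict(float)
    for x, cc, y in rows:
        count_x[x] += 1
        if cc == c:
            count_cx[x] += 1
            sum_y_cx[x] += y
    total = 0.0
    for x, nx in count_x.items():
        if count_cx[x] == 0:
            # Positivity fails: P(Y | C=c, X=x) cannot be estimated from the data.
            raise ValueError(f"no units with C={c} and X={x}")
        total += (nx / n) * (sum_y_cx[x] / count_cx[x])
    return total

def naive_mean(rows, c):
    """Unadjusted estimate of E[Y | C=c]."""
    ys = [y for _, cc, y in rows if cc == c]
    return sum(ys) / len(ys)

rows = simulate_observational(100_000, random.Random(0))
adjusted = adjusted_mean(rows, 1) - adjusted_mean(rows, 0)
naive = naive_mean(rows, 1) - naive_mean(rows, 0)
print(f"adjusted effect: {adjusted:.3f}")  # 1.000
print(f"naive effect:    {naive:.3f}")     # close to 2.2; varies with the sample
```

Because \(f\) is deterministic, each cell mean equals \(f(c, x)\) exactly, so the adjusted effect here is exactly 1 regardless of the sample; the sketch only works because it conditions on the right covariate, which is exactly what we usually cannot do.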

Randomized Experiments

In an experiment, we know the mechanism of treatment assignment. Although this post is set in a deterministic universe, the assignment is determined by the output of a pseudorandom number generator, so we know it cannot be causally downstream of \(X\). Because the experimenter sets the assignment, the edge from \(X\) to \(C\) is removed. This means that

\[ X \perp C, \quad \text{that is,} \quad P(X|C) = P(X) \]

If we start with the observed conditional distribution \(P(Y|C)\) and expand it as we did for the observational study, but now use the independence between \(X\) and \(C\), we get:

\[ P(Y|C) = \sum_X P(X|C) P(Y|C,X) = \sum_X P(X) P(Y|C,X) \]

This is exactly our target expression. This means that in a randomized experiment, the target distribution can be obtained simply by reading off \(P(Y|C)\), the distribution of \(Y\) in each treatment group.
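To illustrate, here is a sketch that simulates a randomized experiment in a hypothetical toy system: binary \(X\) with an assumed \(P(X=1) = 0.3\), response \(f(c, x) = c + 2x\), and treatment assigned by a fair coin flip that ignores \(X\). In this toy system the target distribution for treatment \(c\) puts mass 0.7 on \(f(c, 0)\) and 0.3 on \(f(c, 1)\). Only \((C, Y)\) is recorded, yet the empirical \(P(Y|C)\) should match that target up to sampling noise.

```python
import random
from collections import Counter

# Hypothetical toy system (illustration only).
P_X1 = 0.3  # assumed P(X=1); the analysis below never looks at X

def f(c, x):
    """Hypothetical deterministic response Y = f(C, X)."""
    return c + 2 * x

def simulate_experiment(n, rng):
    """Randomized experiment: C is a fair coin flip, independent of X."""
    rows = []
    for _ in range(n):
        x = 1 if rng.random() < P_X1 else 0
        c = 1 if rng.random() < 0.5 else 0
        rows.append((c, f(c, x)))  # only (C, Y) is recorded
    return rows

def empirical_p_y_given_c(rows, c):
    """Read off P(Y | C=c) as relative frequencies within treatment group c."""
    ys = [y for cc, y in rows if cc == c]
    counts = Counter(ys)
    return {y: k / len(ys) for y, k in sorted(counts.items())}

rows = simulate_experiment(100_000, random.Random(1))
for c in (0, 1):
    print(c, empirical_p_y_given_c(rows, c))
```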

The beautiful thing about randomized experiments is that this works even if you don't know which covariates go inside \(Y = f(\cdot)\). Since the assignment is not causally downstream of any covariate, the distribution of every covariate that matters, no matter how many there are or what the functional form of \(f(\cdot)\) is, is the same in all the treatment groups in expectation. In a finite sample the groups differ only by chance, and those differences shrink as the sample size grows.
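One way to see this is to check covariate balance under coin-flip assignment. The sketch below draws a number of hypothetical independent binary covariates (their count and their probabilities are arbitrary assumptions), assigns treatment by a fair coin, and reports the largest difference in covariate means between the two groups. It never refers to \(f\): the balance comes from the assignment mechanism alone.

```python
import random

def max_imbalance(n, k, seed=0):
    """Largest absolute difference in covariate means between the C=1 and C=0
    groups, for n units with k hypothetical binary covariates and fair-coin
    assignment. Assumes n is large enough that both groups are non-empty."""
    rng = random.Random(seed)
    probs = [rng.random() for _ in range(k)]   # arbitrary P(covariate j = 1)
    sums = {0: [0] * k, 1: [0] * k}
    counts = {0: 0, 1: 0}
    for _ in range(n):
        covariates = [1 if rng.random() < p else 0 for p in probs]
        c = 1 if rng.random() < 0.5 else 0     # assignment ignores the covariates
        counts[c] += 1
        for j, v in enumerate(covariates):
            sums[c][j] += v
    return max(abs(sums[1][j] / counts[1] - sums[0][j] / counts[0]) for j in range(k))

for n in (100, 10_000):
    print(n, round(max_imbalance(n, k=20), 3))
```

The largest imbalance at \(n = 10{,}000\) should be roughly an order of magnitude smaller than at \(n = 100\), consistent with chance differences shrinking like \(1/\sqrt{n}\).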